How many edtech tools does a district have to manage before it becomes a swirl of confusion? On average, K–12 districts access more than 2,400 unique edtech tools each year, far more than most tech teams can manage manually.

And as districts increasingly look to justify every dollar spent, they’re asking: Which of these tools are actually making a positive impact on learning?

Administrators are tasked with creating environments that support positive student outcomes, demonstrating return on investment (ROI), and making informed budget decisions. Weighing costs against usage is one layer. Comparing cost and usage with student outcomes is the layer many want to unpack but aren’t sure how to approach.

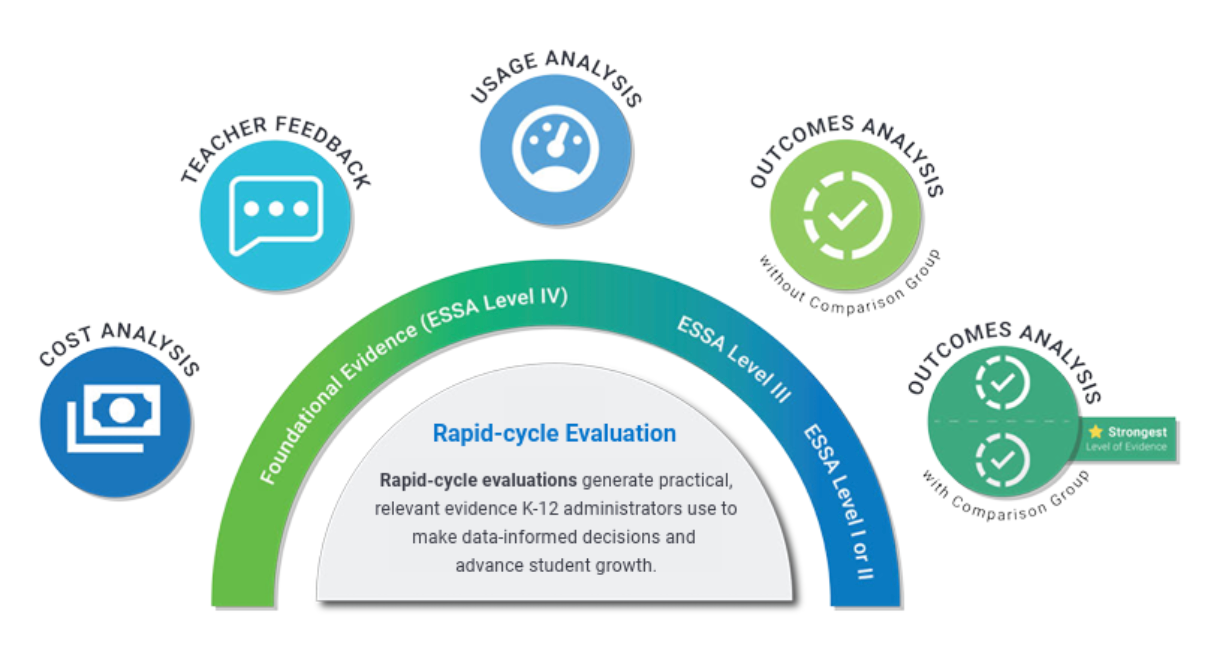

Rapid-cycle evaluation (RCE) is an iterative process for gathering evidence to help K–12 districts understand how tools are being used, what impact they are having, and what decisions should be made to support better education outcomes.

Instructure’s rapid-cycle evaluation engine helps local, regional, and state education agencies go beyond basic edtech usage metrics and dig into specific product outcomes. It combines usage data, assessment results, and product cost data to draw real conclusions about what is moving the needle.

How rapid-cycle evaluation supports edtech decision-making

Here are 10 key questions that K–12 education leaders can answer through rapid-cycle evaluations run with Instructure’s research team.

Understand usage with rapid-cycle evaluation

Digging into usage of an edtech tool with rapid-cycle evaluation can reveal what resonates with students and teachers, identify potential roadblocks to engagement, and uncover opportunities for additional staff training. These questions and the related RCE findings serve as a starting point for deeper conversations about what the data shows.

1. How much are students using an edtech tool?

Rapid-cycle evaluation reveals real-world usage patterns across K–12 classrooms, schools, and grade levels. Knowing which classrooms use a specific tool, and how often it is used in each school and classroom, gives leaders a first round of data to respond to and ask questions about.

2. Are students engaging with an edtech tool to the level recommended or intended?

RCE shows whether usage aligns with how the product was designed to support instruction. Anecdotal feedback from classroom teachers helps, but it is far stronger when backed by data showing fidelity: whether students use the tool as intended to achieve the outcomes it promises.
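To make "fidelity" concrete, the sketch below computes the share of students whose average weekly usage meets a recommended target. It reports this overall and by school. This is a minimal illustration under stated assumptions and is not Instructure's engine. The 60-minute weekly target, the `StudentUsage` record, and the helper names are all hypothetical, and the records are made-up numbers.

```python
from dataclasses import dataclass

# Hypothetical weekly usage target; a real target would come from the vendor's guidance.
RECOMMENDED_MINUTES_PER_WEEK = 60


@dataclass
class StudentUsage:
    student_id: str
    school: str
    minutes_per_week: float  # average weekly minutes spent in the tool


def fidelity_rate(records, target=RECOMMENDED_MINUTES_PER_WEEK):
    """Share of students whose average weekly usage meets the target."""
    if not records:
        return 0.0
    meeting = sum(1 for r in records if r.minutes_per_week >= target)
    return meeting / len(records)


def fidelity_by_school(records, target=RECOMMENDED_MINUTES_PER_WEEK):
    """Fidelity rate computed separately for each school."""
    by_school = {}
    for r in records:
        by_school.setdefault(r.school, []).append(r)
    return {school: fidelity_rate(rs, target) for school, rs in by_school.items()}


# Made-up records for illustration only.
records = [
    StudentUsage("s1", "North", 75),
    StudentUsage("s2", "North", 40),
    StudentUsage("s3", "South", 65),
    StudentUsage("s4", "South", 90),
]
print(fidelity_rate(records))       # 0.75
print(fidelity_by_school(records))  # {'North': 0.5, 'South': 1.0}
```

A district-wide rate can hide gaps between schools. That is why the same calculation is repeated per school.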

3. Is an edtech tool being used consistently across student groups?

Rapid-cycle evaluation helps districts see whether access and engagement are equitable across different demographics and groups of learners.

Evaluate program effectiveness with rapid-cycle evaluation

Understanding usage is a great first step. Using rapid-cycle evaluation to analyze outcomes, however, is where district and school leaders can really gain the evidence needed to defend an investment or change the relationship with a vendor. By pairing product usage data with an assessment outcome measure, education leaders can see what is moving the needle and get the information they need to make critical budget and instructional decisions.

4. Is the usage of an edtech tool demonstrating impact on student outcomes?

RCE connects engagement to learning results, so districts can see whether edtech tools deliver on their promises and positively impact students. When students use the tool with fidelity, districts can compare the expected outcomes with students’ actual progress.

5. Is the usage of an edtech tool showing different impacts on student outcomes for different student groups?

Rapid-cycle evaluation highlights how effectiveness varies across populations. Districts can pose questions that lead to deeper dives into how progress compares between schools, classrooms, demographics, or groups of learners.

6. Is the cost of an edtech tool justified by the results it is demonstrating?

RCE links learning gains to investment to support ROI-based decisions. What counts as an adequate result depends on each district’s goals, investments, and budget. The RCE cycle makes it possible to look at a tool from multiple perspectives rather than trying to justify it with a single metric.
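One way to look at cost from several perspectives is to compute several ratios side by side. The Python sketch below is a hypothetical illustration and not Instructure's method. The `ToolSnapshot` fields and dollar figures are invented. It also assumes the evaluation has already estimated the average score gain of active users over a comparison group, because raw gains alone don't isolate a tool's impact.

```python
from dataclasses import dataclass


@dataclass
class ToolSnapshot:
    name: str
    annual_cost: float       # license cost in dollars (hypothetical)
    licensed_students: int   # assumed to be greater than zero
    active_students: int     # students with meaningful usage
    avg_gain_points: float   # assumed: average gain of active users over a comparison group


def cost_views(tool):
    """Return several cost lenses instead of a single number."""
    views = {
        "cost_per_licensed_student": tool.annual_cost / tool.licensed_students,
        "cost_per_active_student": (
            tool.annual_cost / tool.active_students if tool.active_students else None
        ),
        "activation_rate": tool.active_students / tool.licensed_students,
    }
    # Total score points gained across active users; no gain means the ratio is undefined.
    total_points = tool.active_students * tool.avg_gain_points
    views["cost_per_point_gained"] = (
        tool.annual_cost / total_points if total_points > 0 else None
    )
    return views


# Invented figures for illustration only.
tool = ToolSnapshot("Hypothetical Math App", 20_000, 1_000, 500, 8.0)
print(cost_views(tool))
# {'cost_per_licensed_student': 20.0, 'cost_per_active_student': 40.0,
#  'activation_rate': 0.5, 'cost_per_point_gained': 5.0}
```

In this made-up case, the tool costs $20 per licensed student but $40 per active student, because only half the licenses are in use. Which lens matters most depends on the district's goals.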

Optimize implementation and results with rapid-cycle evaluation

Just like curriculum and instructional strategies, edtech implementation is as nuanced and unique as the teachers and students who use the tools. Rapid-cycle evaluation can help you uncover those nuances and build plans toward continuous improvement by identifying best practices, professional development gaps, and implementation models that will likely work for future tools and programs.

7. What level of usage is driving the most impact on student outcomes?

RCE shows which engagement patterns lead to stronger learning gains. Vendors often recommend a usage level, but it’s difficult for teachers and administrators to gather the data to check it without a concerted effort.
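A simple way to look for the usage level that drives the most impact is to group students into usage bands and compare average gains in each band. The sketch below assumes hypothetical bands in minutes per week and uses made-up (minutes, gain) pairs. It shows correlation only. Band sizes are returned alongside the means so that small groups aren't over-read.

```python
from statistics import mean

# Hypothetical usage bands in minutes per week: (label, lower bound inclusive, upper bound exclusive).
BANDS = [("0-29", 0, 30), ("30-59", 30, 60), ("60-89", 60, 90), ("90+", 90, float("inf"))]


def band_for(minutes):
    """Return the label of the band containing the given weekly minutes."""
    for label, low, high in BANDS:
        if low <= minutes < high:
            return label
    raise ValueError(f"minutes must be non-negative, got {minutes}")


def mean_gain_by_band(students):
    """students: iterable of (minutes_per_week, score_gain) pairs.

    Returns {band_label: (mean_gain, student_count)} for non-empty bands.
    """
    grouped = {label: [] for label, _, _ in BANDS}
    for minutes, gain in students:
        grouped[band_for(minutes)].append(gain)
    return {label: (mean(gains), len(gains)) for label, gains in grouped.items() if gains}


# Made-up data for illustration only.
students = [(10, 2.0), (20, 4.0), (45, 6.0), (50, 8.0), (70, 10.0), (85, 12.0), (120, 11.0)]
print(mean_gain_by_band(students))
# {'0-29': (3.0, 2), '30-59': (7.0, 2), '60-89': (11.0, 2), '90+': (11.0, 1)}
```

In this toy data, average gains rise through the 60-89 band and then level off. The 90+ band holds only one student. With real data, a pattern like this is a prompt for questions rather than a conclusion.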

8. Do certain student groups benefit more from the use of the product than others?

Rapid-cycle evaluation makes it possible to identify who benefits most. It also opens conversations about why that might be and how to close the gap for other groups.

9. Can implementation models from buildings or classrooms using an edtech tool with particular success be replicated?

RCE reveals which strategies should be scaled, which is especially helpful when piloting programs. By identifying how best to roll out a program or tool, leaders save teachers time and energy. They also help students across learning environments benefit as equally as possible.

10. Are there opportunities for professional development in buildings or classrooms demonstrating lower usage or outcomes with an edtech tool?

Teachers do their best with the tools they’ve got. But rapid-cycle evaluation can pinpoint where training and coaching will have the greatest effect. That way, teachers can become more familiar with tools or programs, and students across classrooms and schools can make equitable progress.

Why rapid-cycle evaluation matters for edtech effectiveness

Without rapid-cycle evaluation, K–12 administrators may be able to see which edtech tools are being used. However, districts rarely have the personnel or resources to determine whether those tools deliver on their promised learning gains. From initial cost analysis to data on how tools affect outcomes, RCE provides the evidence needed to know with confidence what works, improve what doesn’t, and make data-informed decisions about edtech investments.

FAQs

What is rapid-cycle evaluation?

Rapid-cycle evaluation (RCE) is a method for quickly measuring how edtech tools impact student learning using real classroom data. It involves an iterative process that includes district leaders, classroom teachers, and researchers. At its most effective, it consists of gathering data, examining it, and asking questions about it to inform the next cycle of data collection.

How does rapid-cycle evaluation help schools?

It helps districts justify spending, optimize implementation, and improve instructional effectiveness. Instead of relying on opinions or anecdotal evidence, district leaders and school boards have concrete evidence about which tools are worth further investment. That evidence is based on improved student outcomes and other metrics the district prioritizes.

What data does rapid-cycle evaluation use?

RCE uses product usage data, assessment results, and cost data.

Who uses rapid-cycle evaluation?

District leaders, curriculum teams, and even teachers can make up the edtech decision-making team that uses RCE to guide technology investments and instructional strategy. This flows outward to benefit classroom teachers, who can receive more targeted professional development or assurance that a tool is working, and students, who can receive the best instruction possible with the tools the district uses.